Made in the 1980s, the Tektronix 1230 is a general-purpose logic analyzer that supports a maximum of 64 channels with up to 2048 bytes of memory per channel. Despite being huge and heavy compared to today's tiny and portable equivalents (such as the Saleae USB logic analyzer), the 1230 certainly still has its place nowadays, for example to debug older 8-bit designs such as Z80 systems, or simply as an educational tool in a digital electronics class.

I got mine from eBay, still in good condition after all these years. The CRT is working well and bright, with no burned-in marks that are typical of old CRTs:

The device comes with a Centronics parallel port and a DB25 RS232 serial port at the back:

The parallel port supports printing to certain Epson-compatible printer models manufactured in the 1980s. The DB25 (not DB9 like most serial ports found on modern devices) serial port is for communication with the PC using a proprietary MS-DOS application, which is nowhere to be found nowadays. The pinout of the serial port can be found in the notes page of the serial port settings:

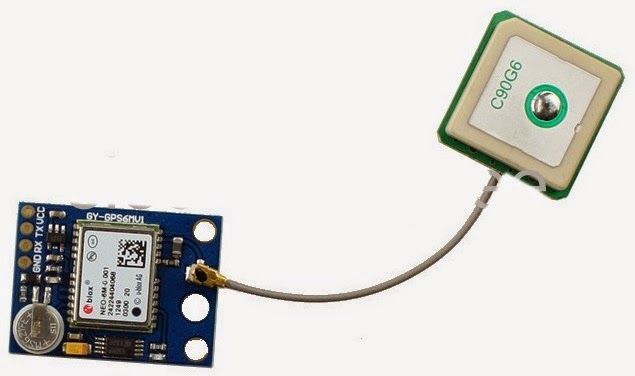

Probes

The device has sockets to connect up to 4 probes, for a maximum of 64 input channels. Tektronix P6444/P6443 probes are supported. The two types are almost identical, except that the P6444 is an active probe whereas the P6443 is passive. My unit did not come with any probes, so I had to purchase a P6444 probe from eBay:

The probe has the following control pins: EXT, CLK 1, CLK 2 and QUAL, as well as input pins D0-D15 for channels 0 to 15. The CLK pins are only needed if the logic analyzer is configured to use a synchronous clock, in which case CLK 1/CLK 2 decide when the logic analyzer captures signal samples. Whether sampling is done on a rising edge or a falling edge is decided by the CLK 1/CLK 2 DIP switches in the centre of the probe box.

The QUAL pin is for signal qualification (enabled via the QUAL OFF/QUAL ON DIP switches). Its operation is described in the manual of the Tektronix 1240, a later but similar model:

I leave it as an exercise for the reader to experiment with the qualifier settings and understand how they actually work after reading this article.

Main menu

The unit boots up to the main menu, which is divided into 3 categories: Setup, Data and Utility:

The Utility menu group contains device time and parallel/serial port settings. It also provides options to save the current setup to be restored later. Important settings that control the data acquisition behaviour are found in the Setup and Data menu groups.

Although the time settings accept years between 1900 and 2099, the year jumps back to 1914 after a reboot even if 2014 was selected. Some sort of Y2K issue, I believe.

Pressing the NOTES key on any screen will show the instruction text for that screen. To print a screenshot of the current screen, press the NOTES key twice. Pressing the D key while in the Printer Port menu will print the contents of the currently active memory bank.

Timebase configuration

The Timebase menu allows you to set the type of timebase for each probe (synchronous/asynchronous), the rate of sampling (for asynchronous timebase), and the voltage threshold for low/high signals. The default threshold is 1.4V, which means that any signal above 1.4V will be considered as logic high. With this setting, the logic analyzer supports both TTL and CMOS signals.
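As a rough illustration of why a single 1.4V threshold can serve both logic families, the sketch below applies the same comparison to a few example voltages. The voltages are illustrative values (typical TTL output low/high and 5V CMOS rail levels), not measurements from the 1230, and the function name is made up for this example.

```python
THRESHOLD_V = 1.4  # default threshold quoted in the text


def to_logic_level(voltage, threshold=THRESHOLD_V):
    """Return 1 (high) if the voltage is above the threshold, else 0 (low)."""
    return 1 if voltage > threshold else 0


# Illustrative voltages only: typical TTL low/high, then 5 V CMOS low/high.
example_voltages = [0.4, 2.4, 0.0, 5.0]
levels = [to_logic_level(v) for v in example_voltages]
print(levels)  # [0, 1, 0, 1]
```

Both families place their low level well below and their high level well above 1.4V, so one threshold separates them cleanly.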

Channel group configuration

The Channel Groups menu allows you to configure the grouping of different input channels:

The interface is not user-friendly at all here, but that is typical for a machine of this era, isn't it? The display shows several channel groups (GPA, GPB, GPC, etc.), with each group supporting binary (BIN), octal (OCT) or hexadecimal (HEX) radix. The channel definition strings have several lines showing which channels in which probes belong to the specified channel groups. The first line is the probe name (A, B, C or D) and the next 2 lines are the channel number (00 to 15). For example, in the above screenshot, channel group GPA is in binary format, uses timebase T1 with positive polarity and contains channels 00 to 15 in probe A.
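To make the idea of a channel group and its radix concrete, here is a minimal sketch that packs a list of channel bits into one value and renders it in the three radixes. It assumes the highest-numbered channel is the most significant bit, which is a guess about the 1230's ordering; `pack_channels` and `format_group` are hypothetical helper names.

```python
def pack_channels(bits_msb_first):
    """Combine individual channel bits (most significant first) into one integer."""
    value = 0
    for bit in bits_msb_first:
        value = (value << 1) | (bit & 1)
    return value


def format_group(value, width_bits, radix):
    """Render a group value as zero-padded BIN, OCT or HEX text."""
    if radix == "BIN":
        return format(value, "0{}b".format(width_bits))
    if radix == "OCT":
        return format(value, "0{}o".format((width_bits + 2) // 3))
    if radix == "HEX":
        return format(value, "0{}X".format((width_bits + 3) // 4))
    raise ValueError("unknown radix: " + radix)


# An 8-channel group carrying the bit pattern 01001000.
value = pack_channels([0, 1, 0, 0, 1, 0, 0, 0])
for radix in ("BIN", "OCT", "HEX"):
    print(radix, format_group(value, 8, radix))
```

For the 8-bit pattern above this prints 01001000, 110 and 48 respectively.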

Trigger configuration

The Trigger menu defines the conditions of the input signal which, if met, will cause the logic analyzer to start capturing samples:

The above display means: if value A occurs 1 time, start capturing the data and fill the sample memory. Moving the cursor to the Condition ("A") field allows you to configure how the value is evaluated:

This is perhaps the most complicated screen in this logic analyzer. Further information is available in the device's help page for the screen.
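The simplest trigger described above ("value A occurs N times") can be sketched in a few lines. This is only a model of the idea, assuming occurrences are counted whether or not they are consecutive; the real trigger logic of the 1230 is richer, and `find_trigger` is a hypothetical name.

```python
def find_trigger(samples, value, count=1):
    """Return the index at which `value` has been seen `count` times, or None."""
    seen = 0
    for index, sample in enumerate(samples):
        if sample == value:
            seen += 1
            if seen == count:
                return index
    return None


samples = [0x00, 0x48, 0x10, 0x48, 0x65]
print(find_trigger(samples, 0x48))           # 1
print(find_trigger(samples, 0x48, count=2))  # 3
print(find_trigger(samples, 0xFF))           # None
```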

Data acquisition configuration

The logic analyzer has 4 memory banks, each holding up to 2048 data points. It has two display modes for captured data: timing and state. In timing mode, signal levels (low/high) are displayed. In state mode, the captured values are displayed as 0s and 1s or, if configured, as their hexadecimal, octal or ASCII equivalents.
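With an asynchronous timebase, the length of time one memory bank can cover is simply the number of data points multiplied by the sample period. The sketch below works this out for a full 2048-point bank; the sample periods are illustrative values chosen for the example, not a list of the rates the 1230 actually offers.

```python
MEMORY_DEPTH = 2048  # data points per memory bank, as stated in the text


def capture_window_ns(sample_period_ns, depth=MEMORY_DEPTH):
    """Time span covered by one full bank, in nanoseconds."""
    return sample_period_ns * depth


# Illustrative sample periods: 100 ns, 1 us and 10 us.
for period_ns in (100, 1_000, 10_000):
    window_us = capture_window_ns(period_ns) / 1000
    print("{} ns/sample -> {} us captured".format(period_ns, window_us))
```

At 100 ns per sample a full bank covers only 204.8 µs, so slower sample rates are needed to see longer events, at the cost of timing resolution.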

The Run Control menu allows you to configure how the input data will be captured and displayed, such as which memory bank (1-4) is used for sample storage and the default display mode shown after the signal has been captured.

The Mem Select menu allows you to select the active memory bank. It also shows a summary of the current timebase settings:

Timing and state diagram

After setting the necessary configurations, press the START button to start capturing the input signals. The logic analyzer will proceed to wait for the trigger conditions to be met. To stop waiting, press the STOP button.

Once the trigger conditions are met, the device will capture the signals until its memory is full and then show the signal timing diagram (or the state diagram if configured in the Run Control menu):

You can scroll between the captured samples using the arrow keys, or zoom in or out by pressing F, followed by 4 or 5 to change the resolution. The following shows the timing diagram when zoomed out:

Below is the state diagram of the captured signal, when viewed in binary mode:

The radix can be changed to octal or hexadecimal by pressing 2:

ASCII data capture

Interestingly, the radix of the state diagram can also be changed to ASCII. To test this, I wrote a PIC program to output all characters of the ASCII string "Hello World" to PORTB of a PIC, with sufficient delay after each character. I then connected the probe channels to the output pins (RB0-RB7) and captured the output data. The following is the result when asynchronous timebase is used for capturing:

Although characters such as 'o', 'd', 'H', 'r', which apparently come from the original "Hello world" string, can be seen, they are not in order, with some characters appearing more than once. This is explained by the fact that the clock is asynchronous and different from the rate at which the output at PORTB is changed, resulting in wrongly sampled data.

To improve the display, I used another pin on the PIC to indicate when the output value changes. This pin will remain low for most of the time and will only be set to high for a short duration whenever the output value on PORTB is changed to a different character. I then connected this pin to the CLK pin on the probe, and set the timebase to synchronous. After capturing the signal again, this is the output screen:

Here "SP" stands for "space" (ASCII code 32). "Hello world" can now be seen clearly in the output, with characters in order and not repeated.
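The difference between the two captures can be reproduced with a small simulation. This is a simplified model rather than a description of the PIC program or the analyzer's internals: time is counted in arbitrary ticks, each character is held on the port for `HOLD_TICKS` ticks, and a strobe goes high for one tick shortly after each change. It shows the repeated and dropped characters of free-running sampling, but it does not try to match the exact contents of the screens above.

```python
MESSAGE = "Hello World"
HOLD_TICKS = 10  # ticks each character stays on the port (illustrative)


def build_signals(message, hold):
    """Return per-tick lists of the port value and the strobe line."""
    data, strobe = [], []
    for ch in message:
        for tick in range(hold):
            data.append(ord(ch))
            # Strobe pulses high one tick after the value has changed.
            strobe.append(1 if tick == 1 else 0)
    return data, strobe


def capture_async(data, period):
    """Sample the port every `period` ticks, ignoring the strobe."""
    return "".join(chr(data[t]) for t in range(0, len(data), period))


def capture_sync(data, strobe):
    """Sample the port once on every rising edge of the strobe."""
    out = []
    for t in range(1, len(data)):
        if strobe[t] == 1 and strobe[t - 1] == 0:
            out.append(chr(data[t]))
    return "".join(out)


data, strobe = build_signals(MESSAGE, HOLD_TICKS)
print(repr(capture_async(data, 4)))    # sampling faster than the data changes
print(repr(capture_async(data, 15)))   # sampling slower than the data changes
print(repr(capture_sync(data, strobe)))
```

Sampling faster than the data changes repeats characters, sampling slower skips some, and only the strobe-clocked capture yields each character exactly once.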

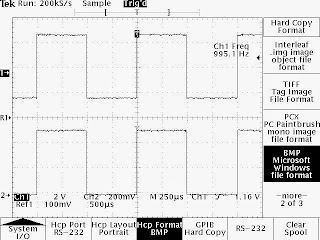

Capturing narrow pulses

Out of curiosity, I decided to test how fast a signal this logic analyzer can capture. This can be done by writing a PIC program to toggle an output pin at a fast rate, and trying to capture that signal. In my tests, the shortest pulse that the logic analyzer can capture is around 80ns:

This is the corresponding display of the same signal on a Rigol DS1052E oscilloscope:

With these tests, I guess the highest signal frequency that the 1230 can reliably work with is around 10-15MHz. Faster signals may not be captured properly due to slow sampling rates and lack of available memory.

Interestingly, although the rate of the asynchronous clock can be set to 10 ns or 20 ns, only half the usual channel memory will be available in this configuration, causing the channel groups and trigger conditions to be automatically modified to exclude channels that are unavailable. Fortunately, the 1230 will prompt you about this before making the changes:

Add-on cards

The 1230 can also act as a digitizing oscilloscope and show the actual signal waveform with an appropriate add-on card. The following is the screen output when such a module is installed:

With the appropriate add-on cards installed, the 1230 can also disassemble instructions for the Z80/8085/68K processors or decode the RS232 protocol using the Disassembly menu.

Unfortunately my unit does not come with any add-on cards and none of these cards can be found on eBay nowadays. Therefore, selecting the Disassembly menu will just display an error message saying "Disassembly requires personality module".

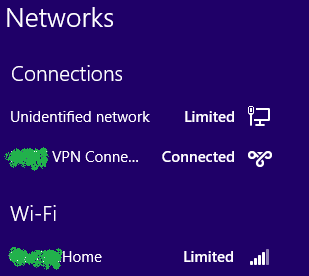

Data printout

Not surprisingly, getting this logic analyzer to print its screenshot or memory contents is a challenge nowadays, as the only supported printing method is via an Epson-compatible printer through a parallel port, which has disappeared from most desktop computers since the introduction of USB. To work around this, I have developed a tool which uses a PIC24 to emulate a parallel port printer and stores the printout on an SD card. The printout can later be converted to a bitmap image (.BMP) using a Windows program.

This is the completed tool when assembled on a stripboard using a ST7735 LCD to display output messages:

See this article for the full source code and other details about the tool.

Most of the screenshots from the logic analyzer in this article were captured using this tool. The same tool can also be used to capture the device memory contents by pressing the D key while in the Printer Port menu. The output looks like this:

Memory | Range is 0000 to 1023 | Timebase 1 | sync 10 uS

Loc GPA

bin

0000 10001000

0001 10001000

0002 01110111

0003 01110111

0004 01110111

0005 01110111

0006 10001000

The 1230 prints its screenshots as graphics but prints its memory as text. In text mode, Epson escape codes are used to support simple text formatting (e.g. bold). The Windows software I developed can only convert the graphics output to a BMP file. For the memory printout, you can simply read the output file directly using any text editor - most will remove the escape codes (ASCII code < 32) automatically.
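If the memory printout needs to be processed rather than just read, a short script can strip the printer codes and turn the rows into numbers. The sketch below is not part of the tool described above. It assumes every escape sequence is ESC followed by a single command character (as with ESC E / ESC F, which I believe are the Epson codes for bold on and off), that the group is printed in binary, and that rows look like the sample shown earlier; the function names are made up for this example.

```python
import re

ESC = "\x1b"
# A data row: 4-digit location, whitespace, then a binary value.
ROW = re.compile(r"^\s*(\d{4})\s+([01]+)\s*$")


def strip_printer_codes(text):
    """Remove ESC+<char> pairs, then any other control characters except newline."""
    text = re.sub(ESC + r".", "", text)  # assumption: two-character sequences only
    return "".join(ch for ch in text if ch == "\n" or ord(ch) >= 32)


def parse_memory_dump(text):
    """Return a list of (location, value) tuples from a binary-radix printout."""
    rows = []
    for line in strip_printer_codes(text).splitlines():
        match = ROW.match(line)
        if match:
            rows.append((int(match.group(1)), int(match.group(2), 2)))
    return rows


# Made-up capture file contents, modelled on the printout shown above.
sample = (
    ESC + "EMemory | Range is 0000 to 1023" + ESC + "F\r\n"
    "Loc GPA\r\n"
    "    bin\r\n"
    "0000 10001000\r\n"
    "0001 10001000\r\n"
    "0002 01110111\r\n"
)
for location, value in parse_memory_dump(sample):
    print(location, hex(value))
```

Header lines are skipped because they do not start with a 4-digit location, so only the data rows reach the result.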

Composite video output

There is a BNC socket, marked as "Video Out", at the back of the logic analyzer. To test the video output, I salvaged a BNC connector from an old oscilloscope probe and made a BNC to RCA adapter:

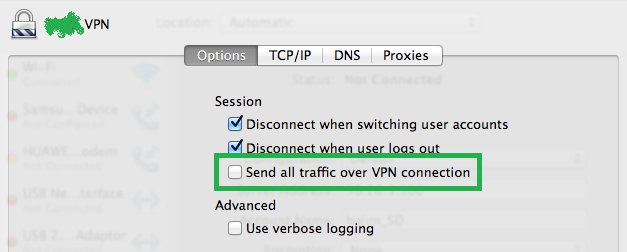

This is the video signal shown on my oscilloscope:


The signal clearly resembles a monochrome composite PAL signal, albeit with a high peak-to-peak voltage (2.5V). It displays well on my old CRT TV:

And on my 21" LCD monitor, with the help of a composite-to-VGA converter:

There is some distortion in the video display, with the bottom and top of the display cut off. This may be due to noise in the video cable or limitations of the video output capabilities.

Probe teardown

After testing the overall functionality of the logic analyzer, I decided to perform a teardown of the probe to see its internal components. This is the front and the back of the probe's circuit board:

Apart from some Tek proprietary components such as TEK 165 2304 01, there are also quite a few 74-series ICs and some MC10H350P PECL to TTL translators. Except for the processing unit in the center of the board, no other ICs are socketed, making it hard to repair if there are issues.

Other information

The only useful information I found about this logic analyzer on the Internet is an old brochure, downloadable from here. It contains basic technical specifications of the 1230 and some information on the different types of supported add-on cards.

The following YouTube videos, probably converted from the original VHS training tapes made by Tektronix, are also useful:

Tektronix 1230 training (part 1)

Tektronix 1230 training (part 2)

See also my previous article on emulating a parallel port printer to capture the print output from this logic analyzer (and other similar equipment):

Capturing data from a Tektronix 1230 logic analyzer by emulating a parallel port printer
